Collaborative Feature Scoring

For companies that deliver 10-30 features per quarter across a handful of development teams, PMs will have different intuitions about what matters. Using a structured approach to align their intuitive perceptions might help.

Context

Your product team delivers 10-30 features per quarter across a handful of development teams. Cross-team priorities are unclear, and PMs are beginning to struggle with how to collaborate across teams. They have different intuitions about what matters.

Discussion

Using a structured approach to align their intuitive perceptions might help. First written about by Don Reinertsen in his excellent book The Principles of Product Development Flow, the Weighted Shortest Job First (WSJF) approach was later popularized by Dean Leffingwell as part of the Scaled Agile Framework (SAFe). SAFe prescribes this technique for all companies, but I found it didn't work well in a larger, low-trust organization: stakeholders didn’t trust our value estimates and weren’t willing to accept our priorities.

If you have a good relationship with your stakeholders and can work with them closely, a structured prioritization approach based on relative scoring can help.

Play

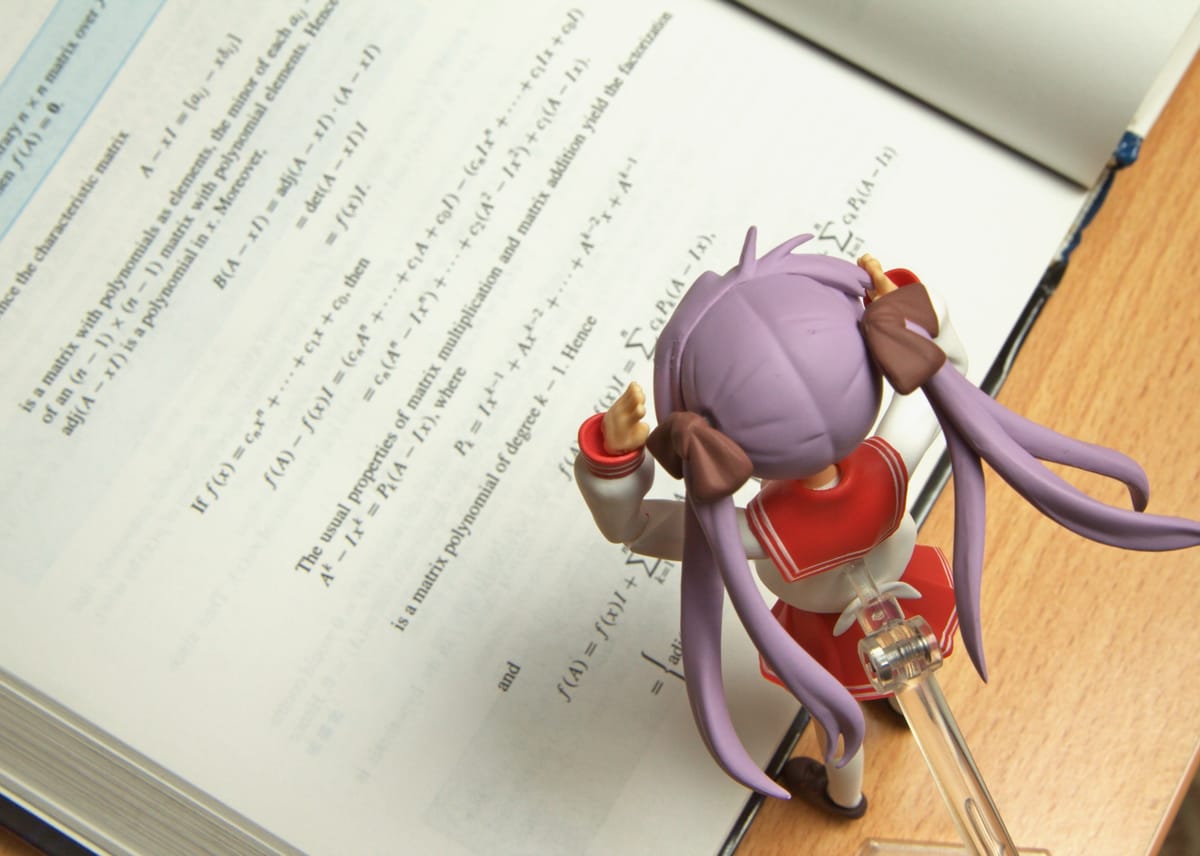

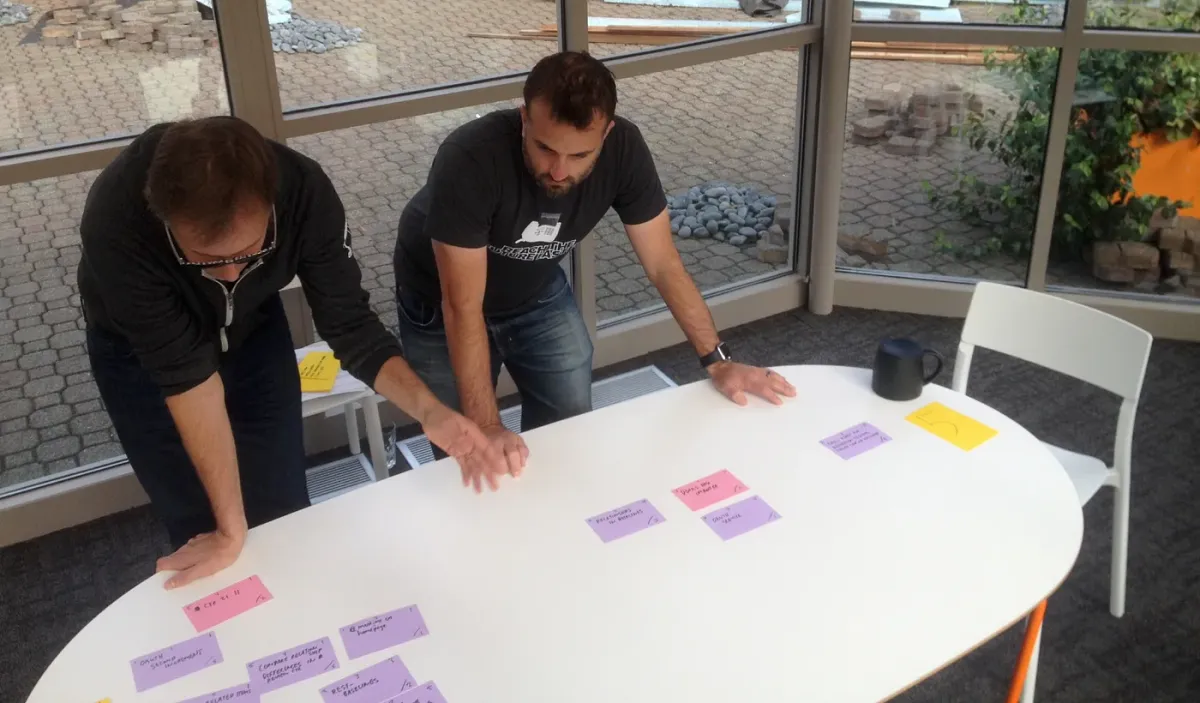

Lay out your 25 or so features and capabilities on cards on a table, or on a Miro board. We're going to arrange them several different times, based on different dimensions. Do this collaboratively with several PMs. Feel free to include some tech leads and a few stakeholders - this helps build buy-in for the scores.

Money

First, arrange them by direct business value, with the lowest value on the left and the highest on the right. Ask about revenue, potential sales gains, or cost savings. Then, using a spreading scale where each step roughly doubles (i.e., 0, 1, 2, 4, 8, 16), form clusters. Discuss any disagreements. Note the scores in the upper-left corner of the cards.
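If you capture rough estimates digitally (for example, from the Miro board) rather than clustering physical cards, a small helper can map each raw estimate onto the spreading scale. This is a minimal sketch under that assumption: `snap_to_scale` and the sample estimates are hypothetical, and ties between two scale values go to the lower one so scores don't inflate.

```python
# The spreading scale from the text: each step roughly doubles.
SCALE = (0, 1, 2, 4, 8, 16)


def snap_to_scale(raw: float, scale: tuple[int, ...] = SCALE) -> int:
    """Return the scale value closest to `raw` (hypothetical helper).

    Ties are broken toward the smaller value, so an estimate halfway
    between two clusters lands in the lower one.
    """
    return min(scale, key=lambda s: (abs(s - raw), s))


# Hypothetical raw estimates, one per card.
raw_estimates = {"Feature A": 0.3, "Feature B": 6, "Feature C": 11}
for name, raw in raw_estimates.items():
    print(f"{name}: raw {raw} -> score {snap_to_scale(raw)}")
```

The snapped numbers are only a starting point; the disagreements the group discusses are still where most of the value comes from.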

Users

If you're a B2B business where the buyer is different from the user, do another round for direct end-user value. Try to keep it proportionate to the business value score: if a '16' for business value meant 10M in new revenue, a '16' for end-user value should represent a similarly large impact for users. Add this to the first number.

Indirect

Then, do another round to estimate risk reduction and opportunity enablement. These are the items that put us in a better position for the future without providing any direct revenue benefit. Some items have information discovery value and deserve a bonus here.

Time Criticality

Most items have a linear time criticality curve: the sooner you get them, the better. These have a time criticality score of 0. But some items have expiration dates: if you don't do them by a certain date, their value disappears. (Consider hitting a market window or a launch aligned with a public event.) When I worked in music tech hardware, any new hardware had to be delivered in early autumn or it would be too late to hit the holiday season. A release in December or January was considered a big waste. Some items have fixed dates (like legal compliance). And other items, like paying down technical debt, can increase in cost exponentially the longer you wait.
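Putting rough numbers on these curves can make it clearer why only some items earn a non-zero time criticality score. The sketch below models three of the profiles described above: steady erosion, a hard market window, and a cost that compounds while you wait. All function names, parameters, and values are hypothetical illustrations, not measurements.

```python
def linear(weeks_late: int, value: float = 100.0, loss_per_week: float = 2.0) -> float:
    # Steady erosion: the sooner the better, but no date is special (score 0).
    return max(0.0, value - loss_per_week * weeks_late)


def deadline(weeks_late: int, value: float = 100.0, window_weeks: int = 6) -> float:
    # Market window: full value until the window closes, then nothing.
    return value if weeks_late <= window_weeks else 0.0


def growing_cost(weeks_late: int, base_cost: float = 10.0, growth: float = 1.15) -> float:
    # Technical debt: the cost of doing the work compounds each week it waits.
    return base_cost * growth ** weeks_late


for weeks in (0, 4, 8, 12):
    print(
        f"{weeks:>2} weeks late | linear value {linear(weeks):5.1f}"
        f" | deadline value {deadline(weeks):5.1f}"
        f" | tech-debt cost {growing_cost(weeks):5.1f}"
    )
```

Only the linear item degrades gracefully; the deadline item falls off a cliff and the technical-debt item keeps getting more expensive, which is what a non-zero time criticality score is meant to capture.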

I encourage people to default to a 0 for time criticality unless there is truly something special about the urgency of a particular item. (Chris Matts would disagree with me here!)

Job Size

If two jobs have equal cost of delay, we should do the shortest job first, in order to get value sooner. So we have to estimate relative job size. It is nice to have tech leads do this, but I find PM estimates of job size for features are often 'good enough' to create a rough scoring.
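A quick worked example shows why the shorter job should go first. Assume, hypothetically, two jobs that each cost 10 units of value per week until they ship, one taking 4 weeks and one taking 1 week, worked one after the other by a single team. The job names and numbers below are illustrative only.

```python
from itertools import permutations

# Hypothetical jobs: (name, cost_of_delay_per_week, size_in_weeks).
JOBS = [("Job A", 10, 4), ("Job B", 10, 1)]


def total_delay_cost(order):
    """Sum each job's weekly cost of delay times the week it finishes,
    assuming one team works the jobs sequentially."""
    elapsed = 0
    total = 0
    for _name, cost_of_delay, size in order:
        elapsed += size
        total += cost_of_delay * elapsed
    return total


for order in permutations(JOBS):
    names = " then ".join(name for name, _, _ in order)
    print(f"{names}: {total_delay_cost(order)}")
```

This prints `Job A then Job B: 90` and `Job B then Job A: 60`: with equal cost of delay, doing the 1-week job first saves 30 units of delay cost.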

Add it up

Here's an example:

Advanced Search

(Business Value 0 + User Value 8 + Indirect 0 + Time Criticality 0) / Size 4 = WSJF Score 2

Cut Build Time

(Business Value 0 + User Value 0 + Indirect 4 + Time Criticality 0) / Size 1 = WSJF Score 4

(Reducing build time often pays off, even compared to an 'important' feature, because it creates more development capacity.)
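If you keep the scores in a spreadsheet or script rather than only on cards, the arithmetic in the example above is easy to automate. This is a minimal sketch, assuming the four scoring rounds are simply summed into a cost of delay and divided by relative size; the `Item` class and its field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Item:
    name: str
    business_value: int
    user_value: int
    indirect: int
    time_criticality: int
    size: int

    @property
    def cost_of_delay(self) -> int:
        # The four scoring rounds are added together.
        return (self.business_value + self.user_value
                + self.indirect + self.time_criticality)

    @property
    def wsjf(self) -> float:
        # Cost of delay divided by relative job size.
        return self.cost_of_delay / self.size


items = [
    Item("Advanced Search", 0, 8, 0, 0, size=4),
    Item("Cut Build Time", 0, 0, 4, 0, size=1),
]

# Highest WSJF score first.
for item in sorted(items, key=lambda i: i.wsjf, reverse=True):
    print(f"{item.name}: CoD {item.cost_of_delay} / size {item.size} = {item.wsjf:g}")
```

This lists Cut Build Time (4) ahead of Advanced Search (2), matching the hand calculation. The computed ranking is a conversation starter for the group, not a verdict.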

Cautions / Caveats

The benefit of WSJF lies mostly in the way it uncovers assumptions among the people doing the scoring. You're taking intuition and structuring it so you can talk about it. But the scores are only really legible to the people who participated in the scoring.

So it can be absolutely great for helping 5-8 PMs agree on priorities and decide who wins in a resource conflict. You can repeat this activity every month or two and solve an alignment problem.

Outsiders, however, will see your weird 'scores' and wonder where these magic numbers came from. In certain companies you'll be able to invite your stakeholders to join in the process, but it's hard to explain. I recommend keeping this one inside your team, or maybe sharing it with engineering. But don't show your scores to the CEO: like velocity or story points, they're more likely to lead to confusion.

You can do this with much larger lists of items. A few weeks back, we tried it with 50 items and a group of 10. A big group can score a large set of cards pretty fast, but individuals will have less confidence in the scores because each of them only got to examine 10-15 cards during the exercise. In this case, make sure people know they'll have a chance to review and adjust the scores later.

Prioritization is an emotional process in many companies, driven by the impulses, intuitions, and even whims of leaders. Beginning to bring some rationality into the discussion can feel threatening to PMs who 'know' that 'someone is just going to tell them what to do anyway'. It's not unusual to encounter resistance when you introduce methods like this that require people to articulate their rationale. This resistance is normal and part of the process of moving toward healthier product management.

Alternative Prioritization Plays